
Pollsters Repair Image with UK Election Call

Britain's pollsters did well in the General Election campaign, with some individual pollsters and the overall average predicting actual vote share very accurately. All underestimated the scale of the Conservative majority. Seats are still harder to call than votes, but the science of seat prediction is also developing.

The Background

A number of recent national votes in the UK have seen pollsters generally slip up, as they did in the US presidential race in 2016, and this has led to much scrutiny of methods by the researchers themselves, and much scrutiny of pollsters by others. In the 2015 election, ten of the 11 final polls suggested that Labour and the Tories were neck-and-neck, pointing to a hung parliament, while in fact the Conservatives won a small majority. The BBC exit poll (Tories 316, Labour 239) proved fairly accurate, with the final outcome Tories 331 and Labour 232. In the days that followed, the British Polling Council, supported by the MRS, set up an independent enquiry into the perceived failure, which later concluded that firms had systematically over-represented Labour supporters and under-represented Conservative supporters in their samples. The MRS subsequently issued two new sets of guidelines, in June 2016.

Most pollsters then missed the result of the EU Referendum in June 2016, naming Remain as the likely winner, although frequently describing the outcome as 'too close to call'. The choice here was unprecedented - there were no 'swings' to be forecast or measured - and normal party lines were broken utterly, as DRNO pointed out in its analysis. A year later came another unexpected result, with Theresa May losing her majority when a larger one had been forecast. Here it seemed the adjustments made after the previous election were in fact the key reason for the errors: polls would have been relatively accurate if they had not factored in 'shy Tories' and the tendency of young voters to stay at home, as Peter Kellner pointed out in an article for the Evening Standard. We asked 'Who'd be a pollster?' but concluded that the profession had a bright future.

2019 Results

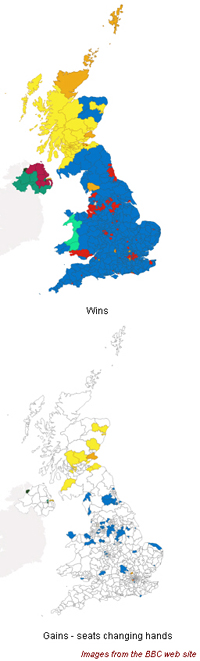

And so to 2019, which may or may not have been the 'Brexit Election', depending on which warring Labour party faction you believe. This time it was harder to identify an overall shift in intention; put another way, more people may have made up their minds in advance. Mid-campaign, the Conservative lead grew to around 15% in some polls, but towards the end it shrank back to around 10%, where it had been soon after the start of the six-week campaign. Final poll results for most of the companies are shown in the chart above (image and analysis courtesy of the BBC, with many thanks). Compare these with the final result of Conservatives 43.6%, Labour 32.2% and Lib Dem 11.5%.

Kantar's last poll was apparently spot-on for the two main parties, but more to the point, several agencies were within 1-2 points on all counts, including the margin.

Opinium, which produced much the best late-breaking prediction in 2016 (it called the Brexit vote for Leave, by 2-7 points, against an actual margin of 3.8 points), was one of those homing in on roughly the right numbers by the end this time. It took a different route, however, at one point being flagged as the outlier with a Tory margin of 19 points, and reporting that lead dropping slowly towards polling day. YouGov, meanwhile, at one point put the lead at 17 points, and saw it drop below 10 points with a week or two to go, then recover slightly.

'There is a part of you which has concerns when you are consistently showing larger (or smaller) leads than the industry average', Opinium's Head of Omnibus James Crouch tells DRNO. 'But, you do have to have trust that the methodology stands up until proven otherwise. If you don't trust it, why go with it in the first place?' Crouch says the firm's methodology review earlier this year 'returned to the basic principle that our samples were not always representative of the people who go and vote', and believes changes in its numbers largely reflected the path taken by undecided voters: 'By identifying the obvious differences between our online samples and what we know from the real-world electorate, we were able to make several corrections that we consider important to improving the accuracy of our polling: rethinking what the most relevant demographic factors for driving voting intention actually are, correcting for false recall of past votes, and remembering we had to get the demographic make-up of the electorate right, not just the resident population.

'The ultimate effect of many of these was probably to increase the role of don't knows and non-committed voters above what you might expect of samples derived from online panels. As the Tories had a huge lead amongst those who were already decided in advance of the campaign, it's not surprising we showed larger leads in the earlier stages, but we also managed to get the late deciders in roughly the right proportion to end up with the correct outcome'.

This suggests that those making up their minds late often chose Labour. That ties in both with demographic statistics (first-time and younger voters might be expected to decide later in the process than those with long-established views and voting behaviour) and with what was reported by other polling companies, which focused less on voters' commitment to turning out.

Overall, Crouch says the election was 'a great success for the polling industry. Every company had a final poll which indicated a Conservative majority, even if the range was quite wide. The 2015 through to 2017 general elections were a perfect storm for the polling industry and 2017 especially made it clear that we should be careful not to learn the wrong lessons from previous elections. This time the wider margin of victory did make it easier for the industry to show different leads while broadly still telling the same story, but we appear to have learnt from many of the mistakes of 2017 as well'.

Seat Prediction

YouGov's vote share predictions proved reasonably accurate, but it was its MRP (multilevel regression and post-stratification) model, which predicts the number of seats in Parliament, that garnered most press attention, partly because of its success in the 2017 election. This time it proved less prophetic, but CEO and founder Stephan Shakespeare suggests that the close attention paid to it by the press and the political parties themselves may actually have helped to drive results in opposite directions. 'For the first time', he tells DRNO, 'our MRP Polling was used explicitly by campaigns to influence tactical voting. It's hard to say how this changed outcomes, if at all, but one thing is clear: when our first MRP came out showing a big majority for the Conservatives (by 68, which was a surprise) it was followed by a rise for Labour, and when our second came out showing it had narrowed (to a lead of just 18 seats), the Conservatives campaigned hard on it being "neck and neck" and the Conservative vote went back up. Clearly there were many voters who feared a Corbyn win'.

MRP results were published just twice, but the model was run daily, and the final run had the majority back up to 46, as Ben Lauderdale of UCL, YouGov's partner on the calculations, discussed on Twitter (https://twitter.com/benlauderdale/status/1205485325642010625?s=12). Shakespeare says that 'throughout', YouGov 'showed the distribution of relative performance pretty precisely, something never expected from polls', adding 'we're very pleased with the model'. The model called 93% of constituencies correctly.

Just as it proved a bad idea to drastically revise methods after the 2015 miss, we should avoid drawing too many conclusions from the success of polls this time round. Opinium's James Crouch concludes: 'The main lesson to be learned from the last four years is that there is no single answer that holds across elections. If we under- or over-estimate one party's vote share in a general election, it should not be taken for granted that the cause behind this will manifest itself in the same way next time around. For example, the industry's inability to spot a clear gap between the Conservatives and Labour in 2015 was probably not down to endemic underestimation of the Conservative vote, but underestimating some demographic groups that David Cameron won over. We tended to focus on the former rather than the latter, and that is a mistake we avoided this time around and should definitely not make in future. In short, every election is different, and we should shake the habit of fighting the last election all over again'.

Fatigue?

So is that it for another five years? Maybe, this time: the size of the majority suggests we are at any rate less likely to see Parliament grinding to a halt, the immediate cause of this election. We'll have a rest from general elections after four national votes in five years, but meanwhile levels of interest in politics haven't been this high for some time. Turnout in 2019, at 67.3%, was very slightly down on 2017 but still higher than at any other election since 1997. There is not much evidence of election fatigue, but please let's not confuse this with campaign fatigue: can we shorten campaigns to three weeks in future, please? And while we're at it, limit the Liberal Democrats to no more than 15 election communications per household per vote (19 received here, and apparently 37 in some constituencies).

Pollsters, I'm sure, won't rest on their laurels, and will continue to provide feedback on individual policies and choices as well as on overall voter support. But their revisions after this experience are likely to be healthy, scientific tinkering rather than a kitchen-sink approach, which is good news. Pat on the back - and back to work.

Nick Thomas
